In the primary grades, students with reading difficulties may need intervention to prevent future reading failure. One way to help educators identify students in need of intervention and implement evidence-based interventions to promote their reading achievement is a framework called “Response To Intervention.”

The Education Department’s Institute of Education Sciences convened a panel to look at the best available evidence and expertise and formulate specific and coherent evidence-based recommendations to use Response To Intervention (RTI) to help primary grade students overcome reading struggles. The panel made five practice recommendations. The first recommendation is:

Screen all students for potential reading problems at the beginning of the year and again in the middle of the year.

First step in RTI

Universal screening is a critical first step in identifying students who are at risk for experiencing reading difficulties and who might need more instruction.

Screening should take place at the beginning of each school year in kindergarten through grade 2. Schools should use measures that are efficient, reliable, and reasonably valid. For students who are at risk for reading difficulties, progress in reading and reading-related skills should be monitored on a monthly or even a weekly basis to determine whether students are making adequate progress or need additional support (see recommendation 4 in the full guide for further detail). Because available screening measures, especially in kindergarten and grade 1, are imperfect, schools are encouraged to conduct a second screening mid-year.

Level of evidence: “moderate”

The panel judged the level of evidence for recommendation 1 to be moderate. This recommendation is based on a series of high-quality correlational studies with replicated findings that show the ability of measures of reading proficiency administered in grades 1 and 2 to predict students’ reading performance in subsequent years (Compton et al., 2006; McCardle et al., 2001; O’Connor and Jenkins, 1999; Scarborough, 1998; Fuchs, Fuchs, and Compton, 2004; Speece, Mills, Ritchey, and Hillman, 2003).

However, it should be cautioned that few of the samples used for validation adequately represent the U.S. population as required by the Standards for Educational and Psychological Testing (AERA et al., 1999).

The evidence base in kindergarten is weaker, especially for measures administered early in the school year (Jenkins and O’Connor, 2002; O’Connor and Jenkins, 1999; Scarborough, 1998; Torgesen, 2002; Badian, 1994; Catts, 1991; Felton, 1992).

Thus, our recommendation for kindergarten and for grade 1 is to conduct a second screening mid-year when results tend to be more valid (Compton et al., 2006; Jenkins, Hudson, and Johnson, 2007).

How to carry out this recommendation

1. Create a building-level team to facilitate the implementation of universal screening and progress monitoring.

In the opinion of the panel, a building-level RTI team should focus on the logistics of implementing school-wide screening and subsequent progress monitoring, such as who administers the assessments, scheduling, and make-up testing. The team should also address substantive issues, such as setting the guidelines the school will use to decide which students require intervention and when students have demonstrated a successful response to tier 2 or tier 3 intervention.

Although each school can develop its own benchmarks, it is more feasible, especially during the early phases of implementation, for schools to use guidelines from national databases. These are often available from publishers, from the research literature, or on the Office of Special Education Programs (OSEP) Progress Monitoring and RTI websites (see National Center on Response to Intervention or National Center on Student Progress Monitoring).

2. Select a set of efficient screening measures that identify children at risk for poor reading outcomes with reasonable accuracy.

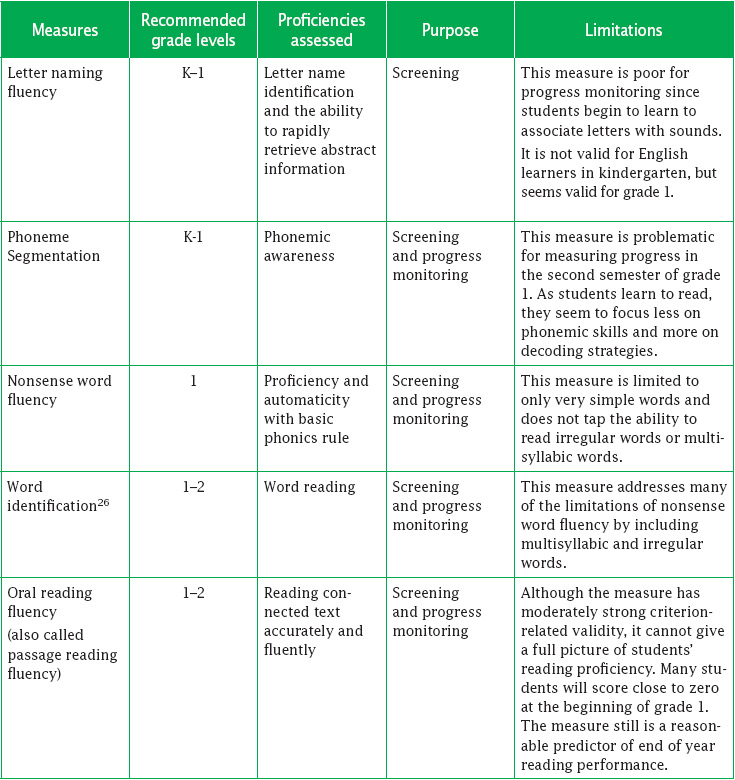

As children develop, different aspects of reading or reading-related skills become most appropriate to use as screening measures. The table below highlights the skills most appropriate for each grade level. Some controversy remains about precisely which one skill is best to assess at each grade level. For that reason, we recommend the use of two screening measures at each juncture. The table also outlines some commonly used screening measures for kindergarten through grade 2, highlighting their focus, purpose, and limitations. The limitations are based on the opinion of the panel.

Table 1: Recommended target areas for early screening and progress monitoring

(Source: Authors’ compilation based on Baker and Baker, 2008; Baker et al., 2006; Compton et al., 2006; Fuchs et al., 2004; Fuchs et al., 2001b; Fuchs, Fuchs, and Maxwell, 1988; Fuchs et al., 2001a; Gersten, Dimino, and Jayanthi, 2008; Good, Simmons, and Kame’enui, 2001; O’Connor and Jenkins, 1999; Schatschneider, 2006; Speece and Case, 2001; Speece et al., 2003.)

Kindergarten screening batteries should include measures assessing letter knowledge, phonemic awareness, and expressive and receptive vocabulary (Jenkins and O’Connor, 2002; McCardle et al., 2001; O’Connor and Jenkins, 1999; Scarborough, 1998a; Torgesen, 2002). Unfortunately, efficient screening measures for expressive and receptive vocabulary are in their infancy.

As children move into grade 1, screening batteries should include measures assessing phonemic awareness, decoding, word identification, and text reading (Foorman et al., 1998). By the second semester of grade 1, the decoding, word identification, and text reading measures should include speed as an outcome (Compton et al., 2006; Fuchs et al., 2004).

Grade 2 batteries should include measures involving word reading and passage reading. These measures are typically timed. Despite the importance of vocabulary, language, and comprehension development in kindergarten through grade 2, very few research-validated measures are available for efficient screening purposes. However, diagnostic measures can be administered to students who appear to demonstrate problems in these areas.

Technical characteristics to consider

The panel believes that three characteristics of screening measures should be examined when selecting which measures (and how many) will be used.

Reliability of screening measures (usually reported as internal consistency reliability or Cronbach’s alpha) should be at least 0.70 (Nunnally, 1978). This information is available from the publisher’s manual or website for the measure.
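To make the 0.70 threshold concrete, the sketch below computes Cronbach’s alpha from item-level scores using the standard formula. It is only an illustration: the function name `cronbach_alpha`, the constant `MIN_RELIABILITY`, and the sample scores are hypothetical and do not come from the practice guide. In practice, schools would normally read the reliability coefficient from the publisher’s manual rather than compute it themselves.

```python
from statistics import variance

MIN_RELIABILITY = 0.70  # threshold cited in the text (Nunnally, 1978)


def cronbach_alpha(item_scores):
    """Return Cronbach's alpha for a list of per-student item score lists.

    item_scores[s][i] is the score of student s on item i.
    """
    n_students = len(item_scores)
    n_items = len(item_scores[0])
    if n_students < 2 or n_items < 2:
        raise ValueError("need at least two students and two items")

    # Variance of each item across students.
    item_variances = [
        variance([student[i] for student in item_scores])
        for i in range(n_items)
    ]
    # Variance of the total (summed) score across students.
    total_variance = variance([sum(student) for student in item_scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 5 students, 4 items scored 0 (wrong) or 1 (right).
sample = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 1, 0],
]
alpha = cronbach_alpha(sample)
meets_threshold = alpha >= MIN_RELIABILITY
print(f"alpha = {alpha:.2f}, meets threshold: {meets_threshold}")
```

A real reliability estimate would rest on a far larger sample than the five students used here.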

Predictive validity is an index of how well the measure provides accurate information on students’ future reading performance, and thus is critical. In the opinion of the panel, predictive validity should reach an index of 0.60 or higher. Reducing the number of false positives (students with scores below the cutoff who would eventually become good readers even without any additional help) is a serious concern. False positives lead to schools providing services to students who do not need them. In the view of the panel, schools should collect information on the specificity of screening measures and adjust benchmarks that produce too many false positives. There is a tradeoff, however, with the sensitivity of the measure, that is, its ability to correctly identify 90 percent or more of students who really do require assistance (Jenkins, 2003). Using at least two screening measures can enhance the accuracy of the screening process; however, decision rules then become more complex.
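The tradeoff between false positives and missed students can be seen by trying several cut-points on the same data. The sketch below is a minimal illustration, assuming a school has fall screening scores and knows which students later turned out to need help. The function name `screening_accuracy` and all scores are hypothetical. It uses the standard definitions: sensitivity is the share of students who needed help that the screen flagged, and specificity is the share of students who did not need help that the screen left unflagged.

```python
def screening_accuracy(scores, needed_help, cut_point):
    """Return (sensitivity, specificity, false_positives) for one cut-point.

    A student is flagged as at risk when the score is below cut_point.
    """
    tp = fp = tn = fn = 0
    for score, truly_at_risk in zip(scores, needed_help):
        flagged = score < cut_point
        if flagged and truly_at_risk:
            tp += 1  # correctly flagged
        elif flagged and not truly_at_risk:
            fp += 1  # false positive: flagged but would have been fine
        elif not flagged and truly_at_risk:
            fn += 1  # missed student
        else:
            tn += 1  # correctly left unflagged
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity, fp


# Hypothetical fall screening scores and later outcomes for 8 students.
scores = [5, 8, 12, 15, 18, 22, 25, 30]
needed_help = [True, True, True, False, True, False, False, False]

for cut in (16, 20, 24):
    sens, spec, fp = screening_accuracy(scores, needed_help, cut)
    print(f"cut-point {cut}: sensitivity={sens:.2f}, "
          f"specificity={spec:.2f}, false positives={fp}")
```

With these made-up numbers, raising the cut-point catches every student who needed help but flags more students who did not, which is the pattern the panel describes.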

Costs in both time and personnel should also be considered when selecting screening measures. Administering additional measures requires additional staff time and may displace instruction. Moreover, interpreting multiple indices can be a complex and time-consuming task. Schools should consider these factors when selecting the number and type of screening measures.

3. Use benchmarks or growth rates (or a combination of the two) to identify children at low, moderate, or high risk for developing reading difficulties (Schatschneider, 2006).

Use cut-points to distinguish between students likely to obtain satisfactory and unsatisfactory reading proficiency at the end of the year without additional assistance. Excellent sources for cut-points are any predictive validity studies conducted by test developers or researchers based on normative samples. Although each school district can develop its own benchmarks or cut-points, guidelines from national databases (see Center on Multi-Tiered Systems of Support, formerly the National Center on Response to Intervention) may be easier to adopt, particularly in the early phases of implementation. As schools become more sophisticated in their use of screening measures, many will want to go beyond using benchmark assessments two or three times a year and use a progress monitoring system.
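As a rough illustration of how benchmarks and growth rates might each be turned into a decision rule, the sketch below sorts a screening score into low, moderate, or high risk and estimates a weekly growth rate from progress monitoring scores. The function names, the cut-point values, and the scores are all hypothetical. Actual cut-points should come from the measure’s predictive validity studies or a national database.

```python
def risk_level(score, high_risk_below, moderate_risk_below):
    """Classify a screening score using two cut-points (assumed values)."""
    if score < high_risk_below:
        return "high"
    if score < moderate_risk_below:
        return "moderate"
    return "low"


def weekly_growth(weekly_scores):
    """Least-squares slope of scores over equally spaced weekly checks."""
    n = len(weekly_scores)
    if n < 2:
        raise ValueError("need at least two scores to estimate growth")
    mean_week = (n - 1) / 2
    mean_score = sum(weekly_scores) / n
    numerator = sum(
        (week - mean_week) * (score - mean_score)
        for week, score in enumerate(weekly_scores)
    )
    denominator = sum((week - mean_week) ** 2 for week in range(n))
    return numerator / denominator


# Hypothetical cut-points for a words-correct-per-minute measure.
HIGH_RISK_BELOW = 20
MODERATE_RISK_BELOW = 40

print(risk_level(15, HIGH_RISK_BELOW, MODERATE_RISK_BELOW))
print(risk_level(32, HIGH_RISK_BELOW, MODERATE_RISK_BELOW))
print(risk_level(55, HIGH_RISK_BELOW, MODERATE_RISK_BELOW))
print(weekly_growth([10, 12, 14, 16]))
```

A school combining the two approaches could, for example, keep monitoring a moderate-risk student whose growth rate is strong, though any such rule is a local decision and not something the guide prescribes.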

Roadblocks and suggested approaches

Roadblock 1.1. It is too hard to establish district-specific benchmarks.

Suggested Approach. National benchmarks can assist with this process. It often takes a significant amount of time to establish district-specific benchmarks or standards. By the time district-specific benchmarks are established, a year could pass before at-risk readers are identified and appropriate instructional interventions begin. National standards are a reasonable alternative to establishing district-specific benchmarks.

Roadblock 1.2. Universal screening falsely identifies too many students.

Suggested Approach. Selecting cut-points that accurately identify 100 percent of the children at risk casts a wide net, also identifying a sizeable group of children who will develop normal reading skills. We recommend using universal screening measures to liberally identify a pool of children that, through progress monitoring methods, can be further refined to those most at risk (Compton et al., 2006). Information on universal screening and progress monitoring measures can be found at the Center on Multi-Tiered Systems of Support (formerly the National Center on Response to Intervention) or the Iris Center at Vanderbilt University.

Roadblock 1.3. Some students might get “stuck” in a particular tier.

Suggested Approach. If schools are responding to student performance data using decision rules, students should not get stuck. A student may stay in one tier because the instructional match and learning trajectory are appropriate. To ensure students are receiving the correct amount of instruction, schools should frequently reassess, allowing fluid movement across tiers. Response to each tier of instruction will vary by student, requiring students to move across tiers as a function of their response to instruction. The tiers are not standard, lock-step groupings of students. Decision rules should allow students showing adequate response to instruction at tier 2 or tier 3 to transition back into lower tiers with the support they need for continued success.

Roadblock 1.4. Some teachers place students in tutoring when they are only one point below the benchmark.

Suggested Approach. No measure is perfectly reliable. Keep this in mind when students’ scores fall slightly below or above a cutoff score on a benchmark test. The panel recommends that districts and schools review the assessment’s technical manual to determine the confidence interval for each benchmark score. If a student’s score falls within the confidence interval, either conduct an additional assessment of that student or monitor the student’s progress for a period of six weeks to determine whether the student does, in fact, require additional assistance (Francis et al., 2005).
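The confidence-interval rule can be sketched as a simple three-way decision. The example below is an assumption-laden illustration, not the panel’s procedure. It assumes the technical manual reports a standard deviation and a reliability coefficient, and it builds the band from the standard error of measurement using the common psychometric formula SEM = SD × √(1 − reliability). The function names, the 95 percent multiplier, and all numbers are hypothetical. If the manual reports a confidence interval directly, that interval should be used instead.

```python
import math


def standard_error_of_measurement(sd, reliability):
    """Common psychometric formula: SEM = SD * sqrt(1 - reliability)."""
    return sd * math.sqrt(1 - reliability)


def benchmark_decision(score, benchmark, sem, z=1.96):
    """Return a suggested action given a score and a band around the benchmark.

    z=1.96 gives an approximate 95 percent band (an assumed choice).
    """
    half_width = z * sem
    if score < benchmark - half_width:
        return "provide intervention"
    if score <= benchmark + half_width:
        # Too close to the cutoff to call: gather more evidence first.
        return "reassess or monitor for six weeks"
    return "no additional support indicated"


# Hypothetical values: benchmark of 40, SD of 10, reliability of 0.84.
sem = standard_error_of_measurement(sd=10, reliability=0.84)
print(benchmark_decision(39, benchmark=40, sem=sem))
print(benchmark_decision(30, benchmark=40, sem=sem))
print(benchmark_decision(50, benchmark=40, sem=sem))
```

Under these assumed numbers, a student one point below the benchmark lands inside the band, so the rule calls for more evidence before placing the student in tutoring.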

Gersten, R., Compton, D., Connor, C.M., Dimino, J., Santoro, L., Linan-Thompson, S., and Tilly, W.D. (2008). Assisting students struggling with reading: Response to Intervention and multi-tier intervention for reading in the primary grades. A practice guide. (NCEE 2009-4045). Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education. Retrieved from http://ies.ed.gov/ncee/wwc/publications/practiceguides/.